The easiest way to train an object detection model is to use the Azure Custom Vision cognitive service. However, Custom Vision is optimized to quickly recognize major differences between images: it can be trained with small datasets, but it is not designed to detect subtle differences (for example, minor cracks or dents in quality assurance scenarios). If you need to go beyond what the cognitive service provides, you can train your own object detection neural network using transfer learning.

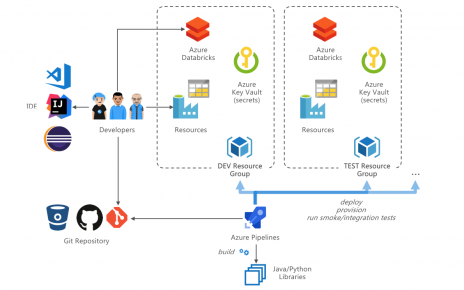

Using Azure Machine Learning with Deep Learning Virtual Machines provides the recommended DevOps infrastructure for running and operationalizing such deep learning projects. However, Azure Databricks is probably the easiest place to start and experiment, as it provides on-demand GPU machines, a machine learning runtime with TensorFlow included, and integrated notebooks. Spark is also a great platform for both data preparation and running inference (predictions) from a trained model at scale.

Although Azure Databricks is typically used to spin up Spark clusters, you can also use it to spin up a single-node GPU ‘cluster’ with up to 4 local GPUs. In this article, we run TensorFlow training locally on the driver GPUs (to scale further, you can distribute training across the cluster using the Databricks Horovod integration). We then use the trained model to detect objects in images, first with local TensorFlow on the driver, then in a distributed manner across Spark workers.

Walkthrough

Setup

In Azure Databricks, create a new cluster. Select:

- Runtime: 5.4 ML (GPU)

- Worker Type: Standard_NC12

- Number of Workers: Min 0, Max 1

- Driver Type: Same as worker

Import the notebook 010-install-init-script.ipynb and run it to create the init script that installs the TensorFlow Object Detection API and its required libraries.
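The notebook's contents are not reproduced here. As a rough illustration only: an init script is a shell script that Databricks runs on each node at startup. The sketch below builds one as a Python string; the install steps, the `models_dir` location and the `/databricks/python/bin/python` interpreter path are assumptions, not the contents of `010-install-init-script.ipynb`.

```python
def build_init_script(models_dir: str = "/databricks/tf-models") -> str:
    """Return the text of a hypothetical cluster init script that installs
    the TensorFlow Object Detection API. All steps are illustrative."""
    py = "/databricks/python/bin/python"  # assumed notebook Python interpreter
    steps = [
        "#!/bin/bash",
        "set -e",  # abort (and fail cluster start) if any step fails
        # protoc is needed to compile the API's protobuf definitions
        "apt-get update && apt-get install -y protobuf-compiler",
        f"{py} -m pip install Cython contextlib2 pillow lxml",
        # In practice, check out a commit compatible with the runtime's TensorFlow 1.x
        f"git clone https://github.com/tensorflow/models.git {models_dir}",
        f"cd {models_dir}/research && protoc object_detection/protos/*.proto --python_out=.",
        # A .pth file in site-packages puts research/ and research/slim/ on sys.path
        f'SITE=$({py} -c "import sysconfig; print(sysconfig.get_paths()[\'purelib\'])")',
        f'echo "{models_dir}/research" > "$SITE/tf_object_detection.pth"',
        f'echo "{models_dir}/research/slim" >> "$SITE/tf_object_detection.pth"',
    ]
    return "\n".join(steps) + "\n"


print(build_init_script())
```

In a Databricks notebook, such a string could be written to DBFS with `dbutils.fs.put(path, build_init_script(), True)`, where `path` is a location of your choosing.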

Edit the cluster configuration: add the path to the newly created init script, then click Confirm and Restart to restart the cluster.
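For reference, the settings above can also be expressed as a JSON payload in the shape used by the Databricks Clusters API (for example the `clusters/edit` endpoint, which additionally requires a `cluster_id`). This is a sketch: the cluster name, the init script path and the runtime key are assumptions to check against your workspace.

```python
import json

# Hypothetical DBFS location of the init script created in the previous step.
INIT_SCRIPT = "dbfs:/databricks/init-scripts/tf-object-detection.sh"

cluster_spec = {
    "cluster_name": "tf-object-detection",  # illustrative name
    # Assumed runtime key for "5.4 ML (GPU)"; list the valid keys with the
    # Clusters API spark-versions endpoint before relying on it.
    "spark_version": "5.4.x-gpu-ml-scala2.11",
    "node_type_id": "Standard_NC12",         # worker type
    "driver_node_type_id": "Standard_NC12",  # driver: same as worker
    "autoscale": {"min_workers": 0, "max_workers": 1},
    "init_scripts": [{"dbfs": {"destination": INIT_SCRIPT}}],
}

print(json.dumps(cluster_spec, indent=2))
```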

To validate the setup, import and run the notebook 020-apply-pretrained-model.ipynb. It downloads a pre-trained “Single Shot MultiBox Detector with MobileNet” model and runs it on two images.
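Models exported with the Object Detection API return, per image, `detection_boxes` (normalized, in `[ymin, xmin, ymax, xmax]` order), `detection_scores`, `detection_classes` and `num_detections`. A minimal, framework-free sketch of turning those outputs into pixel-space results; the function name, the 0.5 threshold and the `category_index` mapping are illustrative, not the notebook's code.

```python
from typing import Dict, List, Mapping, Sequence


def to_pixel_detections(
    boxes: Sequence[Sequence[float]],  # detection_boxes for one image
    scores: Sequence[float],           # detection_scores
    classes: Sequence[float],          # detection_classes (returned as floats)
    num_detections: float,             # num_detections
    width: int,
    height: int,
    category_index: Mapping[int, str],
    min_score: float = 0.5,            # illustrative threshold
) -> List[Dict[str, object]]:
    """Keep confident detections and convert normalized boxes to pixels."""
    results = []
    for i in range(int(num_detections)):
        if scores[i] < min_score:
            continue
        ymin, xmin, ymax, xmax = boxes[i]  # normalized to [0, 1]
        class_id = int(classes[i])
        results.append({
            "label": category_index.get(class_id, str(class_id)),
            "score": float(scores[i]),
            # (left, top, right, bottom) in pixels
            "box": (round(xmin * width), round(ymin * height),
                    round(xmax * width), round(ymax * height)),
        })
    return results


detections = to_pixel_detections(
    boxes=[[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]],
    scores=[0.9, 0.3],
    classes=[18.0, 1.0],
    num_detections=2.0,
    width=640, height=480,
    category_index={1: "person", 18: "dog"},
)
print(detections)  # → [{'label': 'dog', 'score': 0.9, 'box': (128, 48, 384, 240)}]
```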

Transfer learning

We now proceed to training our own model. Import the notebook 030-transfer-learning.ipynb. It downloads the Oxford-IIIT Pet Dataset, which contains 37 object categories (breeds of cats and dogs) with roughly 200 labeled images per class, and retrains an Inception v2 model originally trained to detect objects in the COCO dataset (Common Objects in Context, with 80 everyday object categories such as airplanes and chairs).
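Training needs a label map that assigns each of the 37 breeds an integer id, and the pipeline's `num_classes` must match its size. The TensorFlow models repository already ships one for this dataset (`pet_label_map.pbtxt`); for a custom dataset, a sketch like the following could produce the same protobuf text format. Ids start at 1 because the Object Detection API reserves 0 for the background class. The helper name is hypothetical.

```python
from typing import Iterable


def build_label_map(class_names: Iterable[str]) -> str:
    """Render a label map in the protobuf text format used by the
    Object Detection API. Ids start at 1; 0 is reserved for background."""
    items = []
    for class_id, name in enumerate(class_names, start=1):
        if "'" in name:
            raise ValueError(f"unsupported quote in class name: {name!r}")
        items.append(f"item {{\n  id: {class_id}\n  name: '{name}'\n}}\n")
    return "\n".join(items)


print(build_label_map(["Abyssinian", "american_bulldog"]))
```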

The job configuration is preset to run for 200,000 steps, which takes about 13 hours on this node configuration, but you can also stop training early and shut down the cluster to save costs. While training is running, you can use the integrated TensorBoard view to check performance metrics and view how the model is doing on sample images.
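Instead of stopping early, you can lower the step count before training starts. The Object Detection API reads its settings from a `pipeline.config` file in protobuf text format, with fields such as `num_steps`, `num_classes` and `fine_tune_checkpoint`. A minimal regex-based sketch for overriding such fields; it is a simplification (the API's own `object_detection.utils.config_util` module parses configs properly), and the helper name is hypothetical.

```python
import re


def set_config_value(config_text: str, key: str, value) -> str:
    """Replace every `key: ...` line in a pipeline.config text.

    Strings are written quoted, numbers as-is; indentation is preserved.
    Raises KeyError if the key does not occur."""
    rendered = f'"{value}"' if isinstance(value, str) else str(value)
    pattern = re.compile(rf"^([ \t]*){re.escape(key)}:.*$", re.MULTILINE)
    new_text, count = pattern.subn(
        lambda m: f"{m.group(1)}{key}: {rendered}", config_text)
    if count == 0:
        raise KeyError(key)
    return new_text


config = "train_config {\n  num_steps: 200000\n}\n"
print(set_config_value(config, "num_steps", 50000))
```

From the figures above, 13 hours for 200,000 steps is roughly 0.23 seconds per step, so a 50,000-step run would take about 3.25 hours on the same node.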

You don’t need to wait for training to complete to check results: the job saves a checkpoint file roughly every 10 minutes. Leave the training notebook running, then import the notebook 040-apply-transfer-model.ipynb and run it to test the latest checkpoint of your model against some sample images.
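TensorFlow 1.x can locate the newest checkpoint with `tf.train.latest_checkpoint(train_dir)`, which reads the `checkpoint` state file in the training directory. As an alternative that needs no TensorFlow import, here is a sketch that scans for `model.ckpt-<step>.index` files (the naming the Object Detection API's trainer typically uses) and picks the highest step. Comparing steps numerically matters: sorting file names as strings would rank `model.ckpt-900` above `model.ckpt-2500`.

```python
import os
import re
import tempfile
from typing import Optional

# Checkpoints are typically saved as model.ckpt-<step>.{index,meta,data-*}
_CKPT_INDEX = re.compile(r"^model\.ckpt-(\d+)\.index$")


def latest_checkpoint_prefix(train_dir: str) -> Optional[str]:
    """Return the prefix of the highest-step checkpoint, or None if none exist."""
    best_step = -1
    for name in os.listdir(train_dir):
        match = _CKPT_INDEX.match(name)
        if match:
            best_step = max(best_step, int(match.group(1)))  # numeric, not lexical
    if best_step < 0:
        return None
    return os.path.join(train_dir, f"model.ckpt-{best_step}")


with tempfile.TemporaryDirectory() as d:
    for name in ["model.ckpt-900.index", "model.ckpt-2500.index", "model.ckpt-2500.meta"]:
        open(os.path.join(d, name), "w").close()
    print(os.path.basename(latest_checkpoint_prefix(d)))  # → model.ckpt-2500
```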

Distributed inference with Spark

Pandas UDFs are a powerful, general-purpose way to parallelize arbitrary data processing on Spark. The processing logic is written as standard Pandas code, so it can easily be tested outside of Spark. At runtime, Apache Arrow is used behind the scenes: Arrow is an in-memory columnar data format that efficiently transfers data between the JVM and Python processes, which makes it practical to process large amounts of Spark data in Python.
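To illustrate that testability, here is a plain pandas function of the kind a scalar Pandas UDF wraps: it receives whole columns as `pandas.Series` and returns a Series of the same length. The function itself (area of normalized bounding boxes) is a hypothetical example.

```python
import pandas as pd


def box_area(xmin: pd.Series, ymin: pd.Series,
             xmax: pd.Series, ymax: pd.Series) -> pd.Series:
    """Area of normalized boxes, computed for a whole batch at once.
    Degenerate boxes (max < min) get area 0."""
    width = (xmax - xmin).clip(lower=0)
    height = (ymax - ymin).clip(lower=0)
    return (width * height).astype("float64")


# Tested locally, with no Spark session involved.
areas = box_area(pd.Series([0.0, 0.5]), pd.Series([0.0, 0.5]),
                 pd.Series([0.5, 0.4]), pd.Series([0.5, 1.0]))
print(areas.tolist())  # → [0.25, 0.0]
```

On Spark 2.4 (the version in Runtime 5.4), the same function could be registered with `pyspark.sql.functions.pandas_udf(box_area, "double")` and applied to DataFrame columns; Spark then calls it once per Arrow batch.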

Import the notebook 050-apply-spark.ipynb. You can run this notebook while the transfer learning notebook is still running, since transfer learning uses the GPUs on the Spark driver node, while this notebook uses the GPUs on the worker nodes. The notebook runs distributed prediction on 1,000 images.
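Because Spark calls a Pandas UDF repeatedly, once per Arrow batch, a common pattern for model inference is to load the model lazily, once per Python worker process, rather than once per batch. A sketch of that pattern: `load_detector` is a hypothetical placeholder that returns a fake predictor, the image paths are made up, and this is not the code in `050-apply-spark.ipynb`.

```python
import pandas as pd

_detector = None  # one cached model per Python worker process
load_calls = 0    # only here to make the caching observable


def load_detector():
    """Hypothetical stand-in for loading the exported frozen graph onto
    the worker GPU. Replace with real TensorFlow loading code."""
    global load_calls
    load_calls += 1
    return lambda path: "placeholder-label"  # fake predictor, no real model


def get_detector():
    global _detector
    if _detector is None:  # load once, reuse for every later batch
        _detector = load_detector()
    return _detector


def predict_batch(paths: pd.Series) -> pd.Series:
    """Body of a scalar Pandas UDF: one call per Arrow batch of image paths."""
    detector = get_detector()
    return paths.map(detector)


# Two batches, as Spark would deliver them; the model is loaded only once.
first = predict_batch(pd.Series(["/dbfs/images/a.jpg", "/dbfs/images/b.jpg"]))
second = predict_batch(pd.Series(["/dbfs/images/c.jpg"]))
print(len(first), len(second), load_calls)  # → 2 1 1
```

With Python worker reuse enabled (`spark.python.worker.reuse`, on by default), the cached model typically survives across batches on the same executor. `predict_batch` could then be wrapped with `pandas_udf(predict_batch, "string")`, and `spark.sql.execution.arrow.maxRecordsPerBatch` controls how many rows each batch holds.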

Next steps

You can experiment with a different model from the TensorFlow detection model zoo.

In our case we used an existing script to convert the Oxford Pet dataset to TFRecord files for training. If you have your own dataset, you could use Spark to process your data instead.
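As an illustration of what that processing involves: the Object Detection API's TFRecords typically hold one `tf.train.Example` per image, with box coordinates normalized to [0, 1] under keys such as `image/object/bbox/xmin`. The sketch below covers the per-image grouping and normalization step in plain Python; the input row layout and the function name are assumptions. On Spark, the same grouping could be done with a `groupBy` on the file name before the examples are serialized.

```python
from typing import Dict, Iterable, Mapping


def to_example_features(rows: Iterable[Mapping],
                        label_ids: Mapping[str, int]) -> Dict[str, dict]:
    """Group per-box annotation rows by image and convert pixel boxes to the
    normalized coordinates used in Object Detection API tf.train.Example
    records. The row keys (filename, width, height, label, xmin, ...) are assumed."""
    per_image: Dict[str, dict] = {}
    for r in rows:
        f = per_image.setdefault(r["filename"], {
            "image/filename": r["filename"],
            "image/width": r["width"],
            "image/height": r["height"],
            "image/object/bbox/xmin": [],
            "image/object/bbox/ymin": [],
            "image/object/bbox/xmax": [],
            "image/object/bbox/ymax": [],
            "image/object/class/text": [],
            "image/object/class/label": [],
        })
        # Pixel coordinates -> fractions of the image size
        f["image/object/bbox/xmin"].append(r["xmin"] / r["width"])
        f["image/object/bbox/ymin"].append(r["ymin"] / r["height"])
        f["image/object/bbox/xmax"].append(r["xmax"] / r["width"])
        f["image/object/bbox/ymax"].append(r["ymax"] / r["height"])
        f["image/object/class/text"].append(r["label"])
        f["image/object/class/label"].append(label_ids[r["label"]])
    return per_image


rows = [
    {"filename": "pets/1.jpg", "width": 200, "height": 100, "label": "Abyssinian",
     "xmin": 50, "ymin": 25, "xmax": 150, "ymax": 75},
    {"filename": "pets/1.jpg", "width": 200, "height": 100, "label": "beagle",
     "xmin": 0, "ymin": 0, "xmax": 200, "ymax": 100},
]
feats = to_example_features(rows, {"Abyssinian": 1, "beagle": 2})
print(feats["pets/1.jpg"]["image/object/bbox/xmin"])  # → [0.25, 0.0]
```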