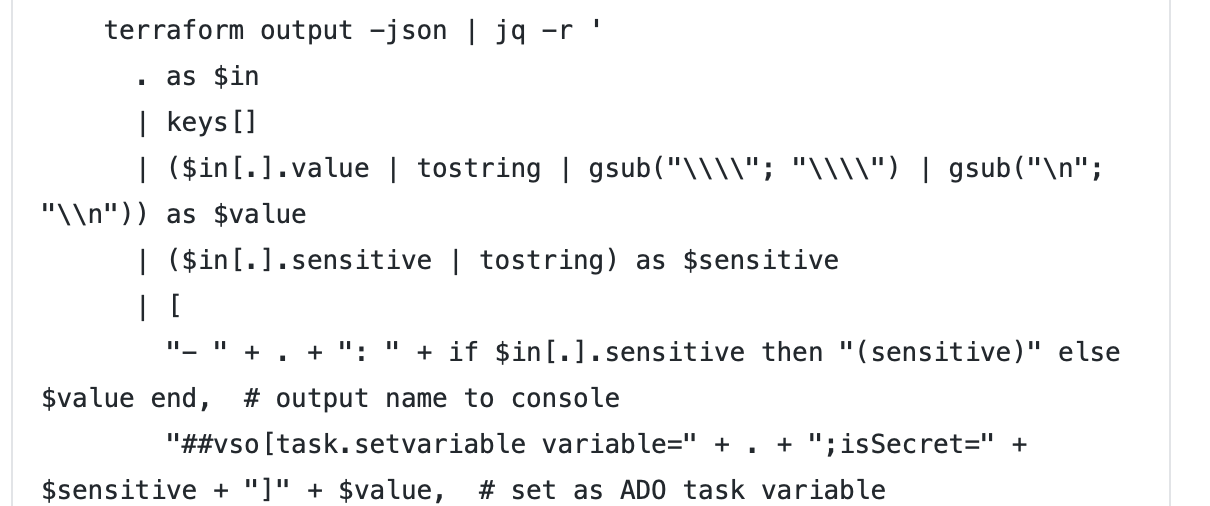

You can use Terraform as a single source of configuration for multiple pipelines. This lets you centralize project-wide configuration, such as your naming strategy for resources. When you run terraform apply, the Terraform state (usually stored as a blob in Azure Storage) records the values of the outputs you have defined. In your output.tf: The Azure […]
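Here is a hedged sketch of one way a pipeline could consume those outputs. It reads the JSON printed by `terraform output -json` and turns each output into an Azure Pipelines `setvariable` logging command, so that later steps see the outputs as pipeline variables. Exposing outputs as pipeline variables is our assumption for illustration, and the function names are hypothetical. Sensitive outputs are marked as secret variables.

```python
import json
import subprocess


def parse_terraform_outputs(raw_json: str) -> dict:
    """Flatten `terraform output -json` into {output_name: value}."""
    # Each output looks like {"sensitive": bool, "type": ..., "value": ...}.
    return {name: entry["value"] for name, entry in json.loads(raw_json).items()}


def to_logging_commands(raw_json: str) -> list:
    """Turn Terraform outputs into Azure Pipelines setvariable logging commands."""
    commands = []
    for name, entry in json.loads(raw_json).items():
        value = entry["value"]
        if not isinstance(value, str):
            # Lists, maps and numbers are passed on as JSON text.
            value = json.dumps(value)
        # Sensitive outputs become secret pipeline variables (masked in logs).
        secret = ";issecret=true" if entry.get("sensitive") else ""
        commands.append(f"##vso[task.setvariable variable={name}{secret}]{value}")
    return commands


def read_terraform_outputs_raw(workdir: str = ".") -> str:
    """Run `terraform output -json` in an initialized Terraform working directory."""
    result = subprocess.run(
        ["terraform", "output", "-json"],
        cwd=workdir, check=True, capture_output=True, text=True,
    )
    return result.stdout
```

A pipeline script step would print each string from `to_logging_commands(read_terraform_outputs_raw())`. Because each pipeline reads the same Terraform state, the naming strategy is defined once in Terraform, not repeated in every pipeline.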

Tag: devops

Provisioning Azure Databricks and PAT tokens with Terraform

See Part 1, Using Azure AD with the Azure Databricks API, for background on the Azure AD authentication mechanism for Databricks. Here we show how to bootstrap the provisioning of an Azure Databricks workspace and generate a PAT that downstream applications can use. Create a script generate-pat-token.sh with the following content. […]
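The script itself is cut off in this excerpt. The following is a hedged Python illustration, not the author's shell script, of the central HTTP call such a script makes. It calls the Databricks Token API (`POST /api/2.0/token/create`) and authenticates with Azure AD tokens rather than an existing PAT. The default comment, lifetime and function names are hypothetical. The two `X-Databricks-Azure-*` headers are the ones Azure Databricks documents for a service principal that has not been added to the workspace yet.

```python
import json
import urllib.request


def build_pat_request(workspace_url: str, aad_token: str, management_token: str,
                      workspace_resource_id: str, lifetime_seconds: int = 3600,
                      comment: str = "downstream-apps") -> urllib.request.Request:
    """Build a Token API call that creates a PAT, authenticated with Azure AD tokens."""
    body = json.dumps({"lifetime_seconds": lifetime_seconds, "comment": comment}).encode("utf-8")
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.0/token/create",
        data=body,
        method="POST",
        headers={
            # Azure AD access token issued for the Azure Databricks resource.
            "Authorization": f"Bearer {aad_token}",
            # Azure AD token for Azure Resource Manager plus the workspace's ARM
            # resource ID; these let a service principal with rights on the
            # workspace resource authenticate before it is a workspace user.
            "X-Databricks-Azure-SP-Management-Token": management_token,
            "X-Databricks-Azure-Workspace-Resource-Id": workspace_resource_id,
            "Content-Type": "application/json",
        },
    )


def create_pat(request: urllib.request.Request) -> str:
    """Send the request and return the new token's secret value."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["token_value"]
```

The workspace resource ID has the form `/subscriptions/<subscription>/resourceGroups/<group>/providers/Microsoft.Databricks/workspaces/<name>`. A bounded `lifetime_seconds` keeps the generated PAT from living forever if it leaks.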

Using Azure AD with the Azure Databricks API

Until now, the Azure Databricks API has required a personal access token (PAT), which must be generated manually in the UI. This complicates DevOps scenarios. A new feature, currently in preview, allows using Azure AD to authenticate with the API. You can use it in two ways: Use Azure AD to authenticate each Azure Databricks […]
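As a hedged sketch of the Azure AD side, a service principal can obtain tokens through the OAuth 2.0 client-credentials grant on the Azure AD v1 token endpoint. It requests one token for the Azure Databricks resource, identified by its well-known application ID, and optionally one for Azure Resource Manager. The tenant, client ID and secret are placeholders, and the helper names are hypothetical. The resulting access token is then sent as a Bearer token to the Databricks API, where a PAT would otherwise go.

```python
import json
import urllib.parse
import urllib.request

# Application ID of the Azure Databricks first-party resource in Azure AD.
AZURE_DATABRICKS_RESOURCE = "2ff814a6-3304-4ab8-85cb-cd0e6f879c1d"
# Azure Resource Manager, used when a management token is also needed.
AZURE_MANAGEMENT_RESOURCE = "https://management.core.windows.net/"


def build_aad_token_request(tenant_id: str, client_id: str, client_secret: str,
                            resource: str) -> urllib.request.Request:
    """Client-credentials request to the Azure AD v1 token endpoint."""
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": resource,
    }).encode("ascii")
    return urllib.request.Request(
        f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
        data=form,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def fetch_access_token(request: urllib.request.Request) -> str:
    """Send the token request and return the access_token field."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["access_token"]


def databricks_headers(access_token: str) -> dict:
    """Headers for a Databricks REST call authenticated with Azure AD instead of a PAT."""
    return {"Authorization": f"Bearer {access_token}"}
```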

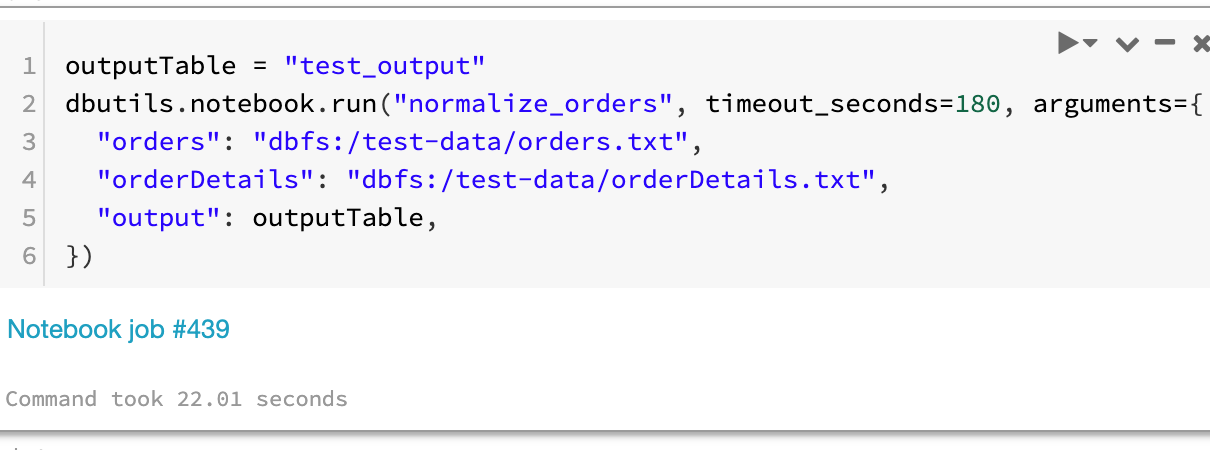

Unit testing Databricks notebooks

A simple way to unit test notebooks is to write the logic in a notebook that accepts parameterized inputs, and a separate test notebook that contains assertions. The sample project https://github.com/algattik/databricks-unit-tests/ contains two demonstration notebooks: The normalize_orders notebook processes a list of Orders and a list of OrderDetails into a joined list, taking into account […]
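The sample notebooks are not reproduced here. The following is a plain-Python sketch of the same pattern: the logic lives in a function that takes its inputs as parameters, and a separate test supplies fixed inputs and asserts on the result. The `Order`/`OrderDetail` fields are hypothetical, and so is the join rule of dropping detail lines that have no matching order. In Databricks, the test notebook could instead call the logic notebook with `dbutils.notebook.run`, passing inputs as arguments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: int
    customer: str


@dataclass(frozen=True)
class OrderDetail:
    order_id: int
    product: str
    quantity: int


def normalize_orders(orders, details):
    """Join each detail line to its order; details without a matching order are dropped."""
    by_id = {order.order_id: order for order in orders}
    return [
        {
            "order_id": d.order_id,
            "customer": by_id[d.order_id].customer,
            "product": d.product,
            "quantity": d.quantity,
        }
        for d in details
        if d.order_id in by_id
    ]


def test_normalize_orders():
    # Fixed, parameterized inputs play the role of the test notebook's fixtures.
    orders = [Order(1, "alice"), Order(2, "bob")]
    details = [OrderDetail(1, "widget", 3), OrderDetail(3, "gadget", 1)]
    result = normalize_orders(orders, details)
    assert result == [{"order_id": 1, "customer": "alice", "product": "widget", "quantity": 3}]
```

Keeping assertions out of the logic notebook means it can run unchanged in production, while the test notebook is run only by the CI pipeline.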

Updating Variable Groups from an Azure DevOps pipeline

We often need a permanent data store across Azure DevOps pipelines, for scenarios such as passing variables from one stage to the next in a multi-stage release pipeline (variables defined in a task are only propagated to tasks in the same stage), or storing state between pipeline runs, for example a blue/green deployment release pipeline […]
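One hedged way to write into a variable group from a pipeline step is the Azure DevOps CLI extension. Its `az pipelines variable-group variable update` command changes one variable's value in a group identified by `--group-id`. The sketch only builds and runs that command. The group ID, variable name, organization URL and project are placeholders, and the helper names are hypothetical.

```python
import subprocess


def variable_update_command(group_id: int, name: str, value: str,
                            organization: str, project: str,
                            secret: bool = False) -> list:
    """Build an `az pipelines variable-group variable update` invocation."""
    cmd = [
        "az", "pipelines", "variable-group", "variable", "update",
        "--group-id", str(group_id),
        "--name", name,
        "--value", value,
        "--org", organization,
        "--project", project,
    ]
    if secret:
        # Store the value as a secret variable in the group.
        cmd += ["--secret", "true"]
    return cmd


def update_variable(*args, **kwargs) -> None:
    """Run the command; raises CalledProcessError if the CLI call fails."""
    subprocess.run(variable_update_command(*args, **kwargs), check=True)
```

`update` only changes an existing variable; `az pipelines variable-group variable create` adds a new one. In a pipeline, the CLI extension can authenticate by setting the `AZURE_DEVOPS_EXT_PAT` environment variable to `$(System.AccessToken)`. As far as we know, the build service identity also needs a role on the variable group that allows editing it.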

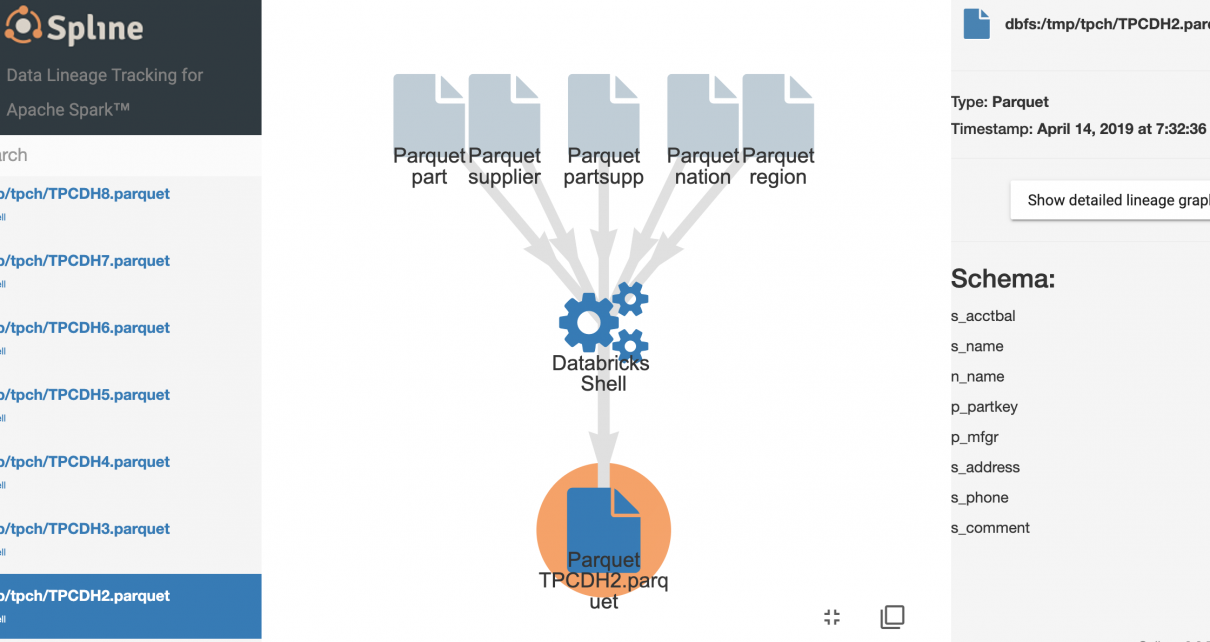

Data Lineage in Azure Databricks with Spline

The Spline open-source project can be used to automatically capture data lineage information from Spark jobs and to provide an interactive GUI for searching and visualizing it. We provide an Azure DevOps template project that automates the deployment of an end-to-end demo project in your environment, using Azure Databricks, Cosmos DB and Azure App […]

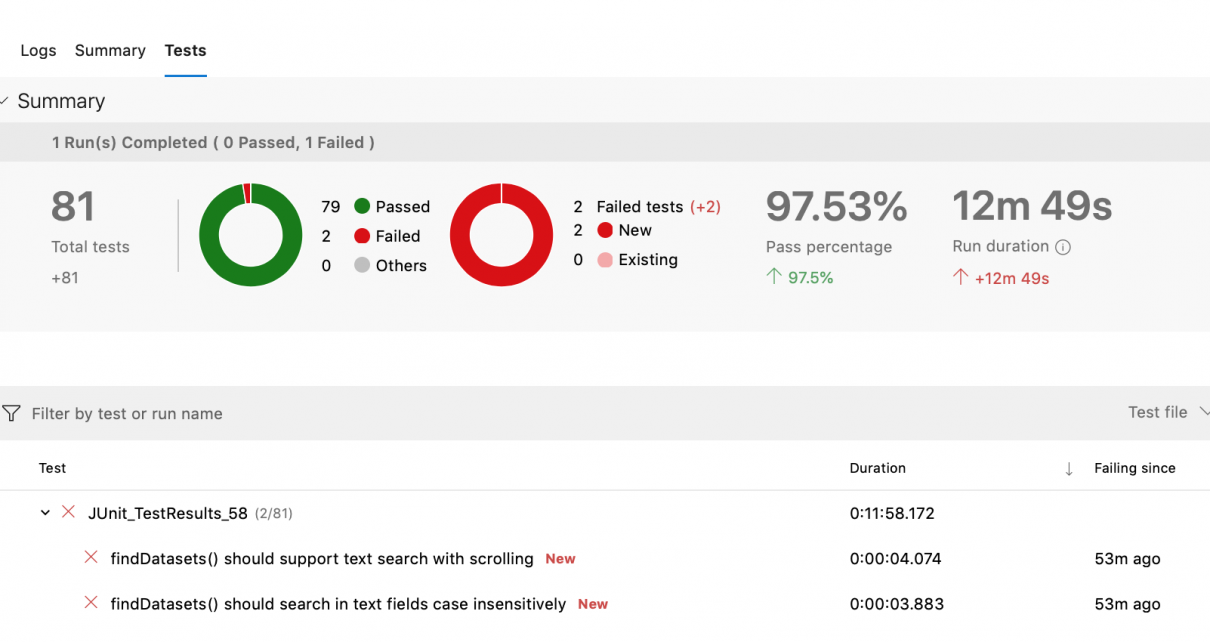

PaaS integration testing with Azure DevOps

Using Azure DevOps pipelines, we can easily spin up test environments to run various kinds of integration tests on PaaS resources. Azure DevOps allows powerful scripting and orchestration using familiar CLI commands, and is very useful for automatically spinning up entire environments with Infrastructure as Code, without manual intervention. Sample project: In this example, we looked at […]
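The sample project is truncated above. A minimal sketch of the spin-up/tear-down pattern is to create a throwaway resource group per build with the Azure CLI, run the tests inside it, and always delete it, even when tests fail. Naming the group from the pipeline's `Build.BuildId` variable is our assumption, as are the helper names. The `run` parameter lets the CLI call be swapped for a fake in tests.

```python
import subprocess
from contextlib import contextmanager


def run_az(args: list) -> None:
    """Invoke the Azure CLI and fail loudly on a non-zero exit code."""
    subprocess.run(["az", *args], check=True)


@contextmanager
def ephemeral_resource_group(name: str, location: str, run=run_az):
    """Create a resource group for the duration of a test run, then delete it."""
    run(["group", "create", "--name", name, "--location", location])
    try:
        yield name
    finally:
        # --no-wait returns immediately; Azure finishes the deletion in the background.
        run(["group", "delete", "--name", name, "--yes", "--no-wait"])
```

In a pipeline step, the tests would run inside something like `with ephemeral_resource_group(f"it-{build_id}", "westeurope"):`. Because all PaaS resources are deployed into that group, deleting the group cleans up everything at once.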

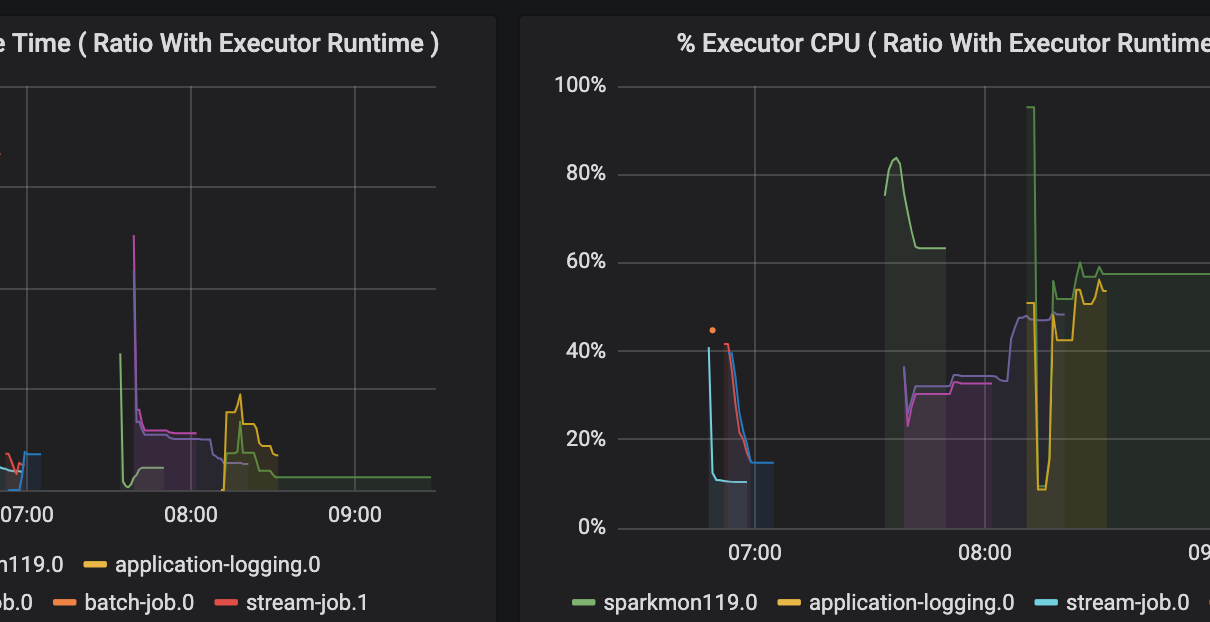

Tutorial: Monitoring Azure Databricks with Azure Log Analytics and Grafana

This is the second post in our series on Monitoring Azure Databricks. See Monitoring and Logging in Azure Databricks with Azure Log Analytics and Grafana for an introduction. Here is a walkthrough that uses automation to deploy a sample end-to-end project, which you can use to quickly get an overview of the logging and monitoring functionality. The provided […]
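To show how custom records reach Log Analytics, here is a hedged sketch of the Azure Monitor HTTP Data Collector API. It is not necessarily the mechanism the walkthrough deploys. Each POST to `/api/logs` is signed with an HMAC-SHA256 over the method, content length, content type, `x-ms-date` header and resource path, using the workspace's base64-encoded shared key. The workspace ID, key and log type are placeholders. Records land in a custom table named after `Log-Type` with a `_CL` suffix.

```python
import base64
import hashlib
import hmac
import json
import urllib.request
from email.utils import formatdate


def build_signature(workspace_id: str, shared_key: str, date: str, content_length: int,
                    method: str = "POST", content_type: str = "application/json",
                    resource: str = "/api/logs") -> str:
    """Compute the SharedKey Authorization header for the Data Collector API."""
    string_to_hash = f"{method}\n{content_length}\n{content_type}\nx-ms-date:{date}\n{resource}"
    digest = hmac.new(base64.b64decode(shared_key), string_to_hash.encode("utf-8"),
                      hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


def build_log_request(workspace_id: str, shared_key: str, log_type: str,
                      records: list) -> urllib.request.Request:
    """Build a signed POST that sends a JSON array of records to Log Analytics."""
    body = json.dumps(records).encode("utf-8")
    date = formatdate(usegmt=True)  # RFC 1123 date, e.g. "Mon, 01 Jan 2024 00:00:00 GMT"
    return urllib.request.Request(
        f"https://{workspace_id}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Log-Type": log_type,
            "x-ms-date": date,
            "Authorization": build_signature(workspace_id, shared_key, date, len(body)),
        },
    )
```

Microsoft has announced that this API is being retired in favor of the Logs Ingestion API, so new projects may prefer the latter.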

Tutorial: DevOps in Azure with Databricks and Data Factory

This is Part 2 of our series on Azure DevOps with Databricks. Read Part 1 first for an introduction and walkthrough of DevOps in Azure with Databricks and Data Factory. Setting up the environment: To get started, you will need a Pay-As-You-Go or Enterprise Azure subscription. A free trial subscription will not allow you to […]

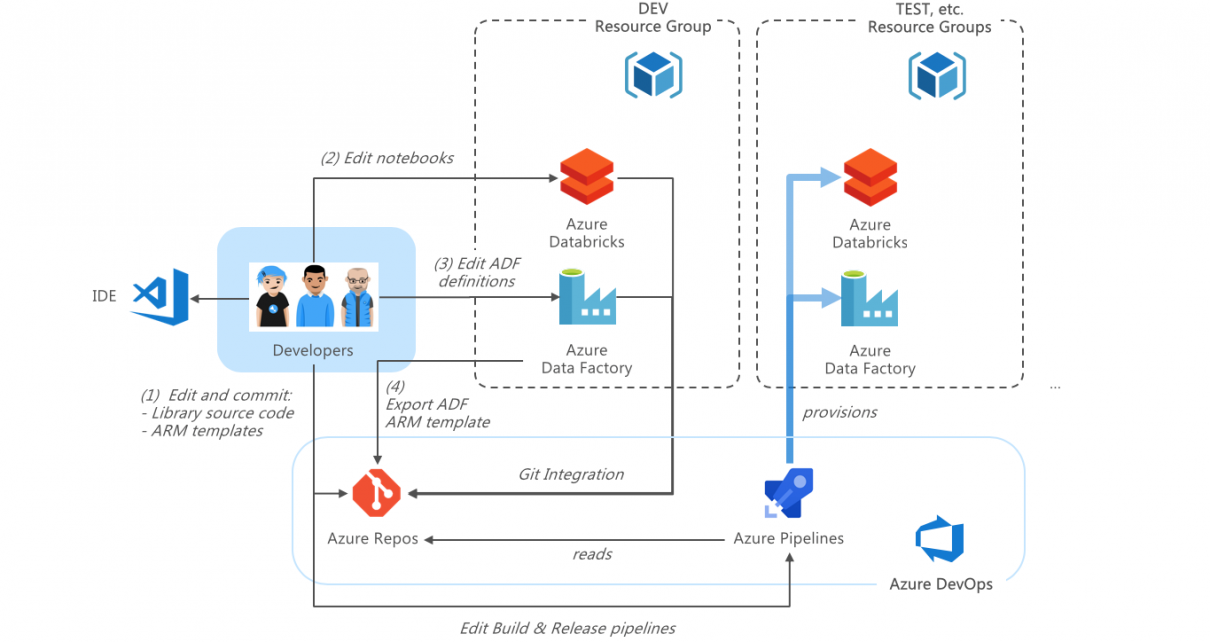

DevOps in Azure with Databricks and Data Factory

Building simple deployment pipelines to synchronize Databricks notebooks across environments is easy, and such a pipeline could fit the needs of small teams working on simple projects. Yet a more sophisticated application includes other types of resources that need to be provisioned in concert and securely connected, such as Data Factory pipelines, storage accounts and […]
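For the simple case of synchronizing notebooks, a deployment step can push each notebook's source into the target workspace through the Databricks Workspace API (`POST /api/2.0/workspace/import`). This is a hedged sketch, not the series' pipeline. The workspace URL, token and paths are placeholders, and `overwrite=True` is our choice so that redeployments replace the previous version.

```python
import base64
import json
import urllib.request


def build_import_request(workspace_url: str, token: str, source: str,
                         workspace_path: str, language: str = "PYTHON") -> urllib.request.Request:
    """Build a Workspace API call that imports notebook source into a workspace path."""
    payload = {
        "path": workspace_path,
        "format": "SOURCE",      # plain source file, as kept in version control
        "language": language,    # SCALA, PYTHON, SQL or R for SOURCE imports
        "content": base64.b64encode(source.encode("utf-8")).decode("ascii"),
        "overwrite": True,       # replace the notebook deployed by a previous run
    }
    return urllib.request.Request(
        f"{workspace_url.rstrip('/')}/api/2.0/workspace/import",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )


def import_notebook(request: urllib.request.Request) -> None:
    """Send the import request; urlopen raises HTTPError on a non-2xx response."""
    with urllib.request.urlopen(request):
        pass
```

The parent folder must already exist. `POST /api/2.0/workspace/mkdirs` creates it. Running the same step against each environment's workspace URL and token keeps the notebooks in sync.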