Building simple deployment pipelines to synchronize Databricks notebooks across environments is easy, and such a pipeline could fit the needs of small teams working on simple projects. Yet, a more sophisticated application includes other types of resources that need to be provisioned in concert and securely connected, such as Data Factory pipelines, storage accounts and databases. The accompanying sample project demonstrates viable approaches to Continuous Integration/Continuous Delivery (CI/CD) in data projects.

This is Part 1 of our series on Azure DevOps with Databricks. Part 2 is a tutorial on deploying the sample project in your environment.


Background

Mature development teams automate CI/CD early in the development process, as the effort to develop and manage the CI/CD infrastructure is well compensated by the gains in cycle time and reduction in defects.

While most references for CI/CD typically cover software applications delivered on application servers or container platforms, CI/CD concepts apply very well to any PaaS infrastructure such as data pipelines. In essence, a CI/CD pipeline for a PaaS environment should:

- Integrate the deployment of an entire Azure environment (comprising for example storage accounts, data factories and databases) within a single pipeline, or a coherent set of interdependent pipelines

- Fully provision environments including resources and notebooks

- Manage service identities as well as credentials

- Run integration and smoke tests

I have been building and operating continuous integration pipelines for over a decade, and am very enthusiastic that the cloud removes much of the friction observed in traditional environments. Here are some of the advantages the cloud provides, set against some (true) pain points I went through earlier in my career:

- The CI/CD infrastructure, including the build agents, is available as a managed service. Gone are capacity and memory limits on shared environments, and the headaches and downtime linked to CI infrastructure upgrades.

- Azure was designed for CI/CD, and all resources can be deployed and provisioned through APIs. Those APIs have enterprise-grade security. No more begging the system administrator to create a virtual machine, which he would do using a GUI, resulting in a slightly different configuration each time… and having to return the next day to ask for more than 50 GB of disk capacity.

- The full set of services and configurations is available. Not being able to test HTTPS in the development environment, because our team could afford a web application firewall only in production, is now a thing of the past.

- Most Azure PaaS resources are free or very inexpensive when deployed in a lower tier and/or when no compute is active. With the sample project, many environments can be provisioned for almost no cost, besides the running cost of Azure Databricks clusters. I won’t miss writing a business case for buying more infrastructure so that we could run an extra environment for demos and stop colliding with the test environment.

This tutorial covers a typical big data workload. It does not cover the modern way of using DevOps to develop machine learning models with Azure Machine Learning. For a tutorial on DevOps with Databricks and Azure ML, see the excellent blog post by my colleague Rene Bremer.

Sample project walkthrough

Project overview

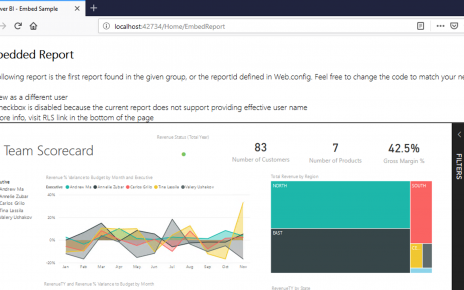

The sample project is an application that predicts the hourly count of bike rentals based on time and weather information. The input data is the UCI Bike Sharing dataset, which is provided out of the box in Databricks deployments. The dataset is split and used to train a basic machine learning model and run predictions. The output is simply written as a JSON file to an Azure Data Lake Storage Gen2 (ADLS Gen2) storage account. An Azure Data Factory pipeline with a single activity calls an Azure Databricks notebook to score a dataset with the model.

The application has been pared down to a minimum in order to make the tutorial easy to follow. A more realistic application would include a data ingestion layer, build more complex models, write the output to a data store such as Azure Cosmos DB, and contain more complex pipelines to train and score models. After understanding the concepts demonstrated with this basic application, you will be able to apply them to other types of resources as well.

Databricks libraries and notebooks

While all the Databricks code of a simple project might reside in notebooks, mature projects should manage their code in libraries that follow object-oriented design and are fully unit tested. Frequently, developers will start by prototyping code in a notebook, then factor out the code into a library.

An efficient development environment for Databricks is therefore a combination of notebooks that are developed interactively, and libraries that are developed in a ‘traditional’ manner, e.g. as Maven projects in an IDE. Libraries might contain core business logic and algorithms, and some of that code may also run in other environments. Notebooks might contain integration logic, such as connectivity to upstream data lakes and downstream data marts.

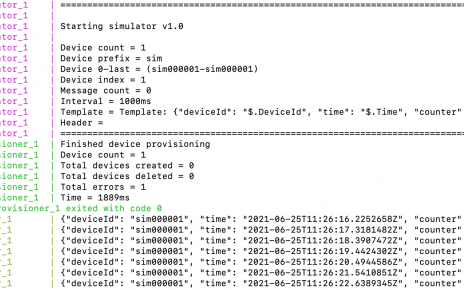

In our sample project, we have written a very simple Java library that computes a machine learning model using Spark MLlib. The library contains a unit test. The code is intentionally simplistic and produces a very poor machine learning model. As we follow DevOps practices, our first job before refactoring any code is to create a CI/CD pipeline so that we can control and measure any changes we make.

The sample project also contains a few notebooks holding the integration logic: they split a sample dataset into three subsets, train the model by calling the library, score it, and apply it to the holdout subset. There is also an integration test notebook that runs the other notebooks in sequence and verifies that they can be called in a pipeline.
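The sequencing performed by the integration test notebook can be sketched in Python as below. This is a minimal sketch, not the sample project's notebook: it assumes the child notebooks are invoked with Databricks' `dbutils.notebook.run(path, timeout_seconds, arguments)`, and the notebook paths, the `run_in_sequence` helper and the convention that each child returns a non-empty value through `dbutils.notebook.exit` are all hypothetical.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical notebook paths; the sample project's actual names may differ.
PIPELINE_NOTEBOOKS = [
    "./prepare_data",
    "./train_model",
    "./score_model",
    "./apply_holdout",
]

# Same shape as dbutils.notebook.run(path, timeout_seconds, arguments) -> exit value.
NotebookRunner = Callable[[str, int, Dict[str, str]], str]


def run_in_sequence(
    run_notebook: NotebookRunner,
    notebooks: List[str] = PIPELINE_NOTEBOOKS,
    timeout_seconds: int = 3600,
    arguments: Optional[Dict[str, str]] = None,
) -> List[str]:
    """Run each notebook in order; fail fast if one returns no exit value."""
    results = []
    for path in notebooks:
        # dbutils.notebook.run raises if the child notebook fails,
        # which in turn fails the integration test.
        result = run_notebook(path, timeout_seconds, arguments or {})
        if not result:
            raise AssertionError(f"Notebook {path} returned no exit value")
        results.append(result)
    return results
```

In a notebook cell, `run_in_sequence(dbutils.notebook.run)` would execute the chain. Because the runner is a parameter, the same logic can also be unit tested with a fake runner outside Databricks.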

Azure Data Factory

Azure Data Factory is often used as the orchestration component for big data pipelines. It might for example copy data from on-premises and cloud data sources into Azure Data Lake Storage, trigger Databricks jobs for ETL, ML training and ML scoring, and move the resulting data to data marts. Data Factory provides a graphical designer for ETL jobs with Data Flow. It also allows more powerful triggering and monitoring than Databricks’ built-in job scheduling mechanism.

Azure Data Factory enables great developer productivity, as pipelines can be designed visually, and definitions are automatically synchronized thanks to in-built Git integration.

CI/CD with Azure Data Factory has some constraints, however. The assets (such as linked services and pipelines) are stored in Git in independent files, and their interdependencies are not explicitly modeled. Therefore, they cannot be simply merged into an ARM template and deployed. The documentation suggests a manual process where an ARM template is exported from DEV and imported manually into the other environments.

Manual operations on CI/CD environments are an unappealing proposition. In the sample project, we partially implement the approach proposed in the Azure Data Factory documentation. The developer must export the ARM template from the DEV environment and put the ZIP file in source control. The deployment of the ZIP file to the TEST environment is then fully automated.
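The automated part can be sketched in Python as follows. This is a hypothetical sketch, not the sample project's script: it assumes the exported ZIP contains files named `arm_template.json` and `arm_template_parameters.json` (check these names against your own export), and the helper `prepare_adf_deployment` is illustrative. It extracts both files and retargets parameters such as `factoryName` at the TEST environment.

```python
import io
import json
import zipfile
from typing import Dict, Tuple

# Assumed file names inside the exported ZIP; verify against your own export.
TEMPLATE_FILE = "arm_template.json"
PARAMETERS_FILE = "arm_template_parameters.json"


def prepare_adf_deployment(zip_bytes: bytes, overrides: Dict[str, str]) -> Tuple[dict, dict]:
    """Extract the exported template and retarget its parameters at another environment."""
    files = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            base = name.rsplit("/", 1)[-1]  # ignore any folder prefix in the archive
            if base in (TEMPLATE_FILE, PARAMETERS_FILE):
                files[base] = json.loads(archive.read(name))
    missing = {TEMPLATE_FILE, PARAMETERS_FILE} - set(files)
    if missing:
        raise ValueError(f"Exported ZIP is missing: {sorted(missing)}")
    parameters = files[PARAMETERS_FILE]
    for key, value in overrides.items():
        # Parameters files use the {"parameters": {"<name>": {"value": ...}}} layout.
        parameters.setdefault("parameters", {})[key] = {"value": value}
    return files[TEMPLATE_FILE], parameters
```

The two returned documents could then be written to disk and deployed with `az deployment group create --resource-group <rg> --template-file <template> --parameters @<parameters>`.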

That is not ideal, as the ZIP file exported from DEV shouldn’t be in source control. We could ask the user to upload it as a manual step in the release pipeline, or manage it as an artifact, but that would cause friction and is error-prone as we can easily lose the link with revisions and branches.

As an alternative, we provide a sample script that generates an ARM template. The script has the advantage of fully automating provisioning and avoiding maintaining generated artifacts in source control, but it requires manually maintaining the list of data factory resources and their logical deployment order. That script is not used in the sample Release pipeline, but you can replace the default Data Factory provisioning script with it.
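The idea behind such a generator can be sketched in Python. This is a hypothetical sketch, not the sample project's script: the inventory `RESOURCES` (definition names and their dependencies) stands in for the manually maintained list, and the definitions' JSON `properties` are assumed to be loaded from the Git repository. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to derive a logical order and emits explicit ARM `dependsOn` entries built with `resourceId()`.

```python
import json
from graphlib import TopologicalSorter
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (child resource type, resource name)

# Hypothetical, hand-maintained inventory: each definition and what it depends on.
RESOURCES: Dict[Key, List[Key]] = {
    ("linkedServices", "AzureKeyVault"): [],
    ("linkedServices", "AzureDatabricks"): [("linkedServices", "AzureKeyVault")],
    ("pipelines", "ScoreBikeRentals"): [("linkedServices", "AzureDatabricks")],
}


def resource_id(kind: str, name: str) -> str:
    """ARM expression that resolves to the child resource ID at deployment time."""
    return (f"[resourceId('Microsoft.DataFactory/factories/{kind}', "
            f"parameters('factoryName'), '{name}')]")


def build_template(properties: Dict[Key, dict]) -> dict:
    """Emit an ARM template with resources in dependency order and explicit dependsOn."""
    # static_order() yields dependencies first and raises CycleError on cycles.
    order = TopologicalSorter(RESOURCES).static_order()
    resources = [
        {
            "type": f"Microsoft.DataFactory/factories/{kind}",
            "apiVersion": "2018-06-01",
            "name": f"[concat(parameters('factoryName'), '/{name}')]",
            "properties": properties[(kind, name)],
            "dependsOn": [resource_id(*dep) for dep in RESOURCES[(kind, name)]],
        }
        for kind, name in order
    ]
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {"factoryName": {"type": "string"}},
        "resources": resources,
    }


if __name__ == "__main__":
    # Empty placeholder properties; a real script would load each JSON definition from Git.
    print(json.dumps(build_template({key: {} for key in RESOURCES}), indent=2))
```

ARM itself only needs `dependsOn` to sequence the deployment; sorting the list additionally catches circular dependencies in the hand-maintained inventory before anything is deployed.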

ARM templates

The resources to be deployed are maintained in one or several ARM templates (JSON definition files). The base for those templates can be easily generated from the Azure portal. In our sample project, we have a single ARM template that uses parameters in order to deploy resources under different resource groups and names in the various environments.
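One way to feed a single template with environment-specific values is to generate an ARM deployment parameters file per stage. A minimal sketch; the parameter names (`storageAccountName`, `factoryName`, `keyVaultName`) and their values are hypothetical and must match the parameters your template actually declares.

```python
import json
from typing import Dict

# Hypothetical per-environment values; align the names with your template's parameters.
ENVIRONMENTS: Dict[str, Dict[str, str]] = {
    "dev": {
        "storageAccountName": "bikesharedev",
        "factoryName": "bikeshare-adf-dev",
        "keyVaultName": "bikeshare-kv-dev",
    },
    "test": {
        "storageAccountName": "bikesharetest",
        "factoryName": "bikeshare-adf-test",
        "keyVaultName": "bikeshare-kv-test",
    },
}


def parameters_file(environment: str) -> dict:
    """Build an ARM deployment parameters document for one environment."""
    values = ENVIRONMENTS[environment]
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


if __name__ == "__main__":
    print(json.dumps(parameters_file("test"), indent=2))
```

Each generated file could then be passed to `az deployment group create --parameters @<file>`, so the template stays identical across stages and only the parameters differ.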

Azure Databricks

The deployment of an Azure Databricks workspace can be automated through an ARM template. However, doing CI/CD with Databricks requires the generation of a Personal Access Token (PAT) which is a manual operation.

To simplify the tutorial, we are not deploying Azure Databricks workspaces through automation. We use a single Databricks workspace for both DEV and TEST environments. You must create an Azure Databricks workspace, manually generate a PAT, and provide it to the Release pipeline.

You can easily modify the project to fit your preferred team setup. For example, you might have different Databricks workspaces for different stages, and/or one workspace per developer.

For simplicity, in the tutorial, you must provide the PAT as a Variable in the Release pipeline, and the pipeline stores it in Azure Key Vault to be retrieved by Azure Data Factory. That is insecure, as it exposes the token. In a production setup, you should change that logic to set the PAT in the Key Vault through the Azure Portal or CLI, and retrieve its value from the Key Vault in the Release pipeline.
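For the production setup just described, a release script could read the token from Key Vault instead of a pipeline variable. A minimal Python sketch that shells out to the Azure CLI's `az keyvault secret show` command; the secret name `databricks-token` is hypothetical, and the agent is assumed to be already logged in (for example through an Azure CLI task with a service connection).

```python
import subprocess
from typing import List


def keyvault_secret_command(vault_name: str, secret_name: str) -> List[str]:
    # --query value with --output tsv prints only the secret value, without JSON quoting.
    return [
        "az", "keyvault", "secret", "show",
        "--vault-name", vault_name,
        "--name", secret_name,
        "--query", "value",
        "--output", "tsv",
    ]


def read_databricks_token(vault_name: str, secret_name: str = "databricks-token") -> str:
    """Fetch the PAT from Key Vault; raises CalledProcessError if the CLI call fails."""
    result = subprocess.run(
        keyvault_secret_command(vault_name, secret_name),
        check=True, capture_output=True, text=True,
    )
    # Keep the value in memory; never echo it to the build log.
    return result.stdout.strip()
```

Azure DevOps also offers a built-in Azure Key Vault task that downloads secrets as secret pipeline variables, which avoids custom code entirely.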

Secrets and managed identities

Azure Data Factory can conveniently retrieve secrets from Azure Key Vault. In the sample project, we use Key Vault to store the Personal Access Token for Azure Databricks. As Azure Data Factory supports managed identities, granting access merely means creating an access policy in the ARM template.
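Such an access policy can be expressed as an ARM resource of type `Microsoft.KeyVault/vaults/accessPolicies`, whose `objectId` is read from the factory's system-assigned identity with `reference(...)`. The sketch below builds that resource as a Python dictionary to be added to a template's `resources` array; the parameter names `keyVaultName` and `factoryName` and the API versions are assumptions to align with your own template, not the sample project's exact definitions.

```python
import json
from typing import Iterable

FACTORY_ID = "resourceId('Microsoft.DataFactory/factories', parameters('factoryName'))"
VAULT_ID = "resourceId('Microsoft.KeyVault/vaults', parameters('keyVaultName'))"


def adf_keyvault_access_policy(secret_permissions: Iterable[str] = ("get", "list")) -> dict:
    """ARM resource granting the Data Factory managed identity read access to secrets."""
    return {
        "type": "Microsoft.KeyVault/vaults/accessPolicies",
        "apiVersion": "2019-09-01",
        # The child name 'add' appends this policy instead of replacing existing ones.
        "name": "[concat(parameters('keyVaultName'), '/add')]",
        "dependsOn": [f"[{FACTORY_ID}]", f"[{VAULT_ID}]"],
        "properties": {
            "accessPolicies": [{
                "tenantId": "[subscription().tenantId]",
                # principalId of the factory's system-assigned managed identity.
                "objectId": f"[reference({FACTORY_ID}, '2018-06-01', 'Full').identity.principalId]",
                "permissions": {"secrets": list(secret_permissions)},
            }]
        },
    }


if __name__ == "__main__":
    print(json.dumps(adf_keyvault_access_policy(), indent=2))
```

No secret or credential appears in the template: the identity's object ID is resolved by ARM at deployment time.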

Azure Databricks supports two mechanisms for storing secrets. In the sample project, we create a Databricks-backed secret scope and grant full permission to all users. You can easily modify the provisioning script to restrict permissions if you are using the Azure Databricks Premium tier.

You can also use an Azure Key Vault-backed secret scope, but you will have to create it manually as the Databricks REST API does not currently allow automating it. Create a separate Azure Key Vault, and a Databricks secret scope named bikeshare. The provisioning script will not overwrite the scope if it already exists.
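The "create only if missing" behavior can be sketched with the Databricks Secrets REST API (`/api/2.0/secrets/scopes/list` and `/api/2.0/secrets/scopes/create`) using only the Python standard library. This is a hypothetical sketch, not the sample project's Bash script; it assumes `DATABRICKS_HOST` (the workspace URL) and `DATABRICKS_TOKEN` are set as environment variables, as in the release pipeline.

```python
import json
import os
import urllib.request
from typing import Optional


def _request(method: str, path: str, body: Optional[dict] = None) -> dict:
    host = os.environ["DATABRICKS_HOST"].rstrip("/")
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(
        f"{host}{path}", data=data, method=method,
        headers={
            "Authorization": f"Bearer {os.environ['DATABRICKS_TOKEN']}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(req) as resp:
        payload = resp.read()
    return json.loads(payload) if payload else {}


def scope_exists(scopes_response: dict, scope: str) -> bool:
    # The list call may return {} when the workspace has no scopes at all.
    return any(s.get("name") == scope for s in scopes_response.get("scopes", []))


def ensure_secret_scope(scope: str = "bikeshare") -> bool:
    """Create a Databricks-backed scope unless it exists; return True if created."""
    if scope_exists(_request("GET", "/api/2.0/secrets/scopes/list"), scope):
        return False  # leaves a manually created Key Vault-backed scope untouched
    # "users" grants MANAGE on the scope to all workspace users; this is
    # required on workspaces without the Premium tier.
    _request("POST", "/api/2.0/secrets/scopes/create",
             {"scope": scope, "initial_manage_principal": "users"})
    return True
```

Checking the list first keeps the provisioning step idempotent, so re-running a release does not fail on an existing scope.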

In the sample project, we use the Databricks secret scope to store the Storage Access Key for the ADLS Gen2 storage account where we will output the result of our machine learning predictions. You could modify this in order to use a service principal instead. You will have to provision the service principal and grant it access to the storage folder the job will write into.
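Inside a notebook, the key stored in the scope could be used to configure the ABFS driver for the ADLS Gen2 account. A minimal sketch; the secret key name `storagekey`, the container `predictions`, the output folder and both helper names are hypothetical. In Databricks you would pass `dbutils.secrets.get` and `spark.conf.set`; accepting plain callables keeps the helper testable outside a cluster.

```python
from typing import Callable


def configure_adls_access(
    account: str,
    get_secret: Callable[..., str],
    set_conf: Callable[[str, str], None],
    scope: str = "bikeshare",
    key: str = "storagekey",
) -> None:
    """Enable storage account key authentication for the ABFS driver."""
    set_conf(
        f"fs.azure.account.key.{account}.dfs.core.windows.net",
        get_secret(scope=scope, key=key),
    )


def output_path(account: str, container: str = "predictions", folder: str = "bikeshare") -> str:
    """abfss:// URI of the folder the predictions are written to."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{folder}"
```

In a notebook, `configure_adls_access("<account>", dbutils.secrets.get, spark.conf.set)` followed by `predictions.write.mode("overwrite").json(output_path("<account>"))` would write the scored results as JSON. Switching to a service principal would change only the configuration keys set here.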

CI/CD pipeline

Git repository

You can use Azure Repos or GitHub as source repository.

Build pipeline

Our first pipeline in Azure DevOps is a build pipeline that retrieves the project files from the Git source repository, builds the Java project, and publishes an artifact containing the compiled JAR as well as all files from the source repository needed for the release pipeline (such as notebooks and provisioning scripts).

Release pipeline

The second pipeline in the sample project is a release pipeline that uses the artifact produced by the Build pipeline. To keep the tutorial simple, the sample Release pipeline has only two stages, DEV and TEST. You can easily clone the TEST stage to provision additional environments, such as UAT, STAGING and PROD, to fit your preferred workflow.

The job for the DEV stage provisions a DEV environment (resource group) from scratch (except for the Azure Databricks workspace, as discussed above). The Azure Data Factory is created, but not provisioned with definitions (for linked services, pipelines etc.) and is therefore empty when the pipeline completes. The reason is that in DEV, we will link the Data Factory to source control, which will keep the definitions synchronized.

The job for the TEST stage also provisions an environment from scratch. In addition to running the same tasks as in DEV, we provision the definitions into Azure Data Factory. The reason for that is that we do not want to link the Data Factory in the TEST environment to source control (we want to fully control our test and staging environments through our CI/CD pipelines). Then, as a last step we run an integration test as a Databricks notebook job.
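Running the integration test as a notebook job can be sketched against the Databricks Jobs API 2.0: a `POST /api/2.0/jobs/runs/submit` call returns a `run_id`, and `GET /api/2.0/jobs/runs/get?run_id=...` reports the run's state. The sketch below covers only the payload and the polling logic; the notebook path, JAR location, Spark version and node type are hypothetical values to replace with your own.

```python
import time
from typing import Callable, Optional

TERMINAL_STATES = {"TERMINATED", "SKIPPED", "INTERNAL_ERROR"}


def submit_payload(notebook_path: str, jar_path: str,
                   spark_version: str = "7.3.x-scala2.12",
                   node_type_id: str = "Standard_DS3_v2") -> dict:
    """Body for POST /api/2.0/jobs/runs/submit running a notebook on a new cluster."""
    return {
        "run_name": "integration-test",
        "new_cluster": {"spark_version": spark_version,
                        "node_type_id": node_type_id,
                        "num_workers": 1},
        # The JAR published by the Build pipeline, assumed already copied to DBFS.
        "libraries": [{"jar": jar_path}],
        "notebook_task": {"notebook_path": notebook_path},
    }


def run_outcome(run: dict) -> Optional[bool]:
    """None while the run is active; True/False once it reaches a terminal state."""
    state = run.get("state", {})
    if state.get("life_cycle_state") not in TERMINAL_STATES:
        return None
    return state.get("result_state") == "SUCCESS"


def wait_for_run(get_run: Callable[[], dict], poll_seconds: float = 30,
                 max_polls: int = 120) -> bool:
    """Poll a runs/get wrapper until the run finishes or the poll budget runs out."""
    for _ in range(max_polls):
        outcome = run_outcome(get_run())
        if outcome is not None:
            return outcome
        time.sleep(poll_seconds)
    raise TimeoutError("Integration test run did not finish in time")
```

Here `get_run` would wrap the `runs/get` call for the submitted `run_id`; a `False` result should make the script exit with a non-zero code so that the TEST stage fails.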

Note that the coverage of our integration test does not encompass our entire infrastructure, as Azure Data Factory is not exercised. A fuller integration test should also test that we can trigger an Azure Data Factory job that runs Databricks notebooks.
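Such a fuller test could start a Data Factory pipeline through the Azure Resource Manager REST API (`createRun`, API version `2018-06-01`) and poll the resulting pipeline run until its `status` is final. A minimal sketch of the URL construction and status handling only; the pipeline name in the usage is hypothetical, and the bearer token is assumed to come from something like `az account get-access-token`.

```python
from typing import Optional

ARM_ENDPOINT = "https://management.azure.com"
API_VERSION = "2018-06-01"


def factory_url(subscription_id: str, resource_group: str, factory: str) -> str:
    return (f"{ARM_ENDPOINT}/subscriptions/{subscription_id}"
            f"/resourceGroups/{resource_group}"
            f"/providers/Microsoft.DataFactory/factories/{factory}")


def create_run_url(subscription_id: str, resource_group: str, factory: str, pipeline: str) -> str:
    # POST to this URL starts a run; the response body contains {"runId": ...}.
    base = factory_url(subscription_id, resource_group, factory)
    return f"{base}/pipelines/{pipeline}/createRun?api-version={API_VERSION}"


def run_status_url(subscription_id: str, resource_group: str, factory: str, run_id: str) -> str:
    # GET on this URL returns the pipeline run, including its "status" field.
    base = factory_url(subscription_id, resource_group, factory)
    return f"{base}/pipelineruns/{run_id}?api-version={API_VERSION}"


def is_finished(status: str) -> Optional[bool]:
    """None while queued or running; True only when the run succeeded."""
    if status in ("Queued", "InProgress", "Canceling"):
        return None
    return status == "Succeeded"
```

Because the pipeline's single activity calls a Databricks notebook, a successful run would also exercise the linked services and the Key Vault access policy, which the notebook-only test leaves untested.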

Deployment scripts

The tasks of the Release pipeline are written as Bash scripts. We make extensive use of the Azure CLI as well as the Databricks CLI. We could also use PowerShell, together with the PowerShell Module for Databricks and/or the Databricks Script Deployment Task by Data Thirst.

Parameters for the Deployment scripts are set as variables of the release pipeline. Those are passed to the deployment scripts as environment variables. The variables DATABRICKS_HOST and DATABRICKS_TOKEN are recognized by the Databricks CLI. Variables such as the name of Azure resources are set to distinct values for the different stages, to avoid collisions. You can modify this setup to fit your organization’s resource naming convention and reduce the number of variables to be managed.
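The same conventions can be sketched in Python: fail early when a required variable is missing, and derive stage-specific resource names. A minimal sketch; the helpers and the `bikeshare` prefix are hypothetical. Note that Azure DevOps exposes non-secret variables to scripts as environment variables automatically, while secret variables such as the token must be mapped explicitly. The naming helper enforces the storage account rule of 3 to 24 lowercase letters and digits.

```python
import os
import re
from typing import Dict, Mapping

REQUIRED = ("DATABRICKS_HOST", "DATABRICKS_TOKEN")


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read the variables the Databricks CLI expects; fail early if any is missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing release variables: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED}


def storage_account_name(prefix: str, stage: str) -> str:
    """Stage-specific name; storage accounts allow 3-24 lowercase letters and digits."""
    name = re.sub(r"[^a-z0-9]", "", f"{prefix}{stage}".lower())
    if not 3 <= len(name) <= 24:
        raise ValueError(f"Invalid storage account name: {name!r}")
    return name
```

For example, `storage_account_name("bikeshare", "TEST")` yields `bikesharetest`, so DEV and TEST never collide on this globally unique name.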

Build and release agents

In our pipelines, we use Microsoft-hosted agents for build and release. This is very convenient, as Azure DevOps provides us with a throwaway virtual machine for every run for free (up to a monthly limit).

If we wanted to use Virtual Network and Firewall isolation to protect our resources (such as Key Vault and Storage), we could use a self-hosted agent, i.e. run the job on a virtual machine within our virtual network.

Conclusion

The sample project and this walkthrough illustrate how CI/CD can be used to orchestrate the deployment, provisioning and staging of entire environments including compiled code, Azure Databricks and other Azure resources. The sample project is not meant to provide a recommended framework, but simply to showcase ways to implement CI/CD concepts with Azure PaaS resources using the tooling available.

We run CI/CD pipelines in Azure DevOps as it is available for free to open source teams and to small teams, and you can be running in seconds since it is a managed service. You can however deploy this project with another CI/CD environment, such as Jenkins, Bamboo or TeamCity.

While deploying and provisioning resources is quite easy thanks to Azure Resource Manager templates and APIs, the need to account for service authentication and manage associated secrets adds a layer of complexity. Some services support managed identities, others do not. As the Azure platform evolves and Azure Active Directory authentication, managed identities and Key Vault integration are rolled out to ever more services, this is becoming increasingly streamlined.

The additional complexity and maintenance effort introduced by deployment and testing automation should not be underestimated. There is a reasonable level of automation for each project and team. DevOps is a journey, and you should start with simple configurations until you are fully familiar with automation, even if that means managing certain resources manually.

In this tutorial, we are not covering branching and merging. Developing in a single branch might be sufficient for small teams working on simple projects. With more complex setups, it is worthwhile to set up a strategy where changes are developed on branches and merged only after they have been validated. Different branching and merging strategies are available, and you should adapt your setup to align with your strategy and team culture.

Proceed to Part 2, a tutorial on deploying the sample project in your environment.