Until Azure Storage Explorer implements the Selection Statistics feature for ADLS Gen2, here is a code snippet for Databricks that recursively computes the storage size used by ADLS Gen2 accounts (or by any other storage that `dbutils.fs` can list). The code is quite inefficient because it runs in a single thread on the driver and lists one directory at a time, so if you have millions of files you should multithread it. You could even take on the challenge of parallelizing it in Spark, although recursive code is awkward in Spark because only the driver can create tasks.

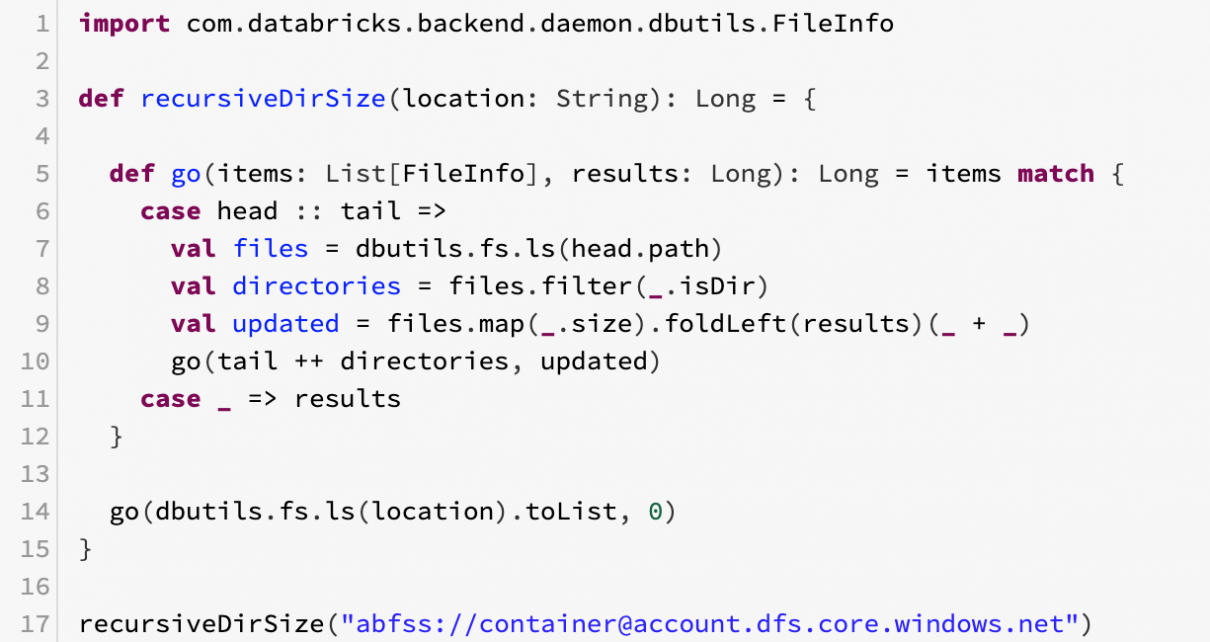

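```scala
import com.databricks.backend.daemon.dbutils.FileInfo

// Returns the total size in bytes of everything under `location`, walking the tree breadth-first.
def recursiveDirSize(location: String): Long = {

  @annotation.tailrec
  def go(items: List[FileInfo], results: Long): Long = items match {
    case head :: tail =>
      // Listing a directory returns its children; listing a file returns the file itself.
      val files = dbutils.fs.ls(head.path)
      // Queue the sub-directories so they are listed in a later step.
      val directories = files.filter(_.isDir)
      // Add the size of every entry at this level (directory entries are typically reported as 0 bytes).
      val updated = files.map(_.size).foldLeft(results)(_ + _)
      go(tail ++ directories, updated)
    case _ => results // nothing left to list
  }

  go(dbutils.fs.ls(location).toList, 0L)
}

recursiveDirSize("abfss://container@account.dfs.core.windows.net")
```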
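For trees with millions of files, where multithreading is suggested above, one option is to let a `java.util.concurrent.ForkJoinPool` list sub-directories concurrently on the driver. This is a minimal sketch, not the author's code, and it rests on a few assumptions: `Entry` is a hypothetical stand-in for the three `FileInfo` fields used above, `parallelDirSize` and its `parallelism` default of 16 are illustrative choices, and the listing function is passed in as a parameter so the logic can be exercised without Databricks.

```scala
import java.util.concurrent.{ForkJoinPool, RecursiveTask}

// Hypothetical stand-in for the FileInfo fields the size computation needs.
final case class Entry(path: String, size: Long, isDir: Boolean)

// Sums the sizes of all files under `root`. `list` returns the direct children
// of a path; each sub-directory is listed by its own fork/join subtask, and
// `parallelism` sets the pool's target number of worker threads.
def parallelDirSize(root: String, list: String => Seq[Entry], parallelism: Int = 16): Long = {

  class SizeTask(dir: String) extends RecursiveTask[java.lang.Long] {
    override protected def compute(): java.lang.Long = {
      val children = list(dir).toVector // force a strict collection so forks happen now
      // Start one subtask per sub-directory before doing any local work.
      val subtasks = children.filter(_.isDir).map { d =>
        val task = new SizeTask(d.path)
        task.fork()
        task
      }
      // Files at this level; directory entries themselves are not counted.
      val localBytes = children.filterNot(_.isDir).map(_.size).sum
      // Wait for every sub-directory total and add it to this level's total.
      val childBytes = subtasks.map(_.join().longValue()).sum
      java.lang.Long.valueOf(localBytes + childBytes)
    }
  }

  val pool = new ForkJoinPool(parallelism)
  try pool.invoke(new SizeTask(root)).longValue()
  finally pool.shutdown()
}
```

On Databricks you could pass `p => dbutils.fs.ls(p).map(f => Entry(f.path, f.size, f.isDir))` as `list`, assuming `dbutils.fs.ls` can safely be called from several driver threads at once, which is worth checking on your runtime. Listing is network-bound rather than CPU-bound, so a `parallelism` above the driver's core count may still help; tune it against your storage account's request limits.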